Add AI superpower to your Delphi & C++Builder apps part 6: RAG

Monday, June 2, 2025

This is part 6 in our article series about adding AI superpower to your Delphi & C++Builder apps. In the first 5 installments, we covered:

- Introduction to using LLMs via REST API

- Function calling from LLMs

- Multimodal LLM usage

- MCP servers

- MCP clients

In this new episode, we will explore RAG. RAG stands for Retrieval Augmented Generation. Let's turn to AI to get us a proper definition of RAG:

Definition

RAG stands for Retrieval-Augmented Generation. It's a technique in AI that combines two key components:

- Retrieval: Finding relevant information from external sources (like databases, documents, or knowledge bases)

- Generation: Using a language model to create responses based on that retrieved information

Here's how it works: Instead of relying solely on what a language model learned during training, RAG systems first search for relevant information from external sources, then use that information to generate more accurate and up-to-date responses.

Why RAG is useful:

- Provides access to current information beyond the model's training data

- Reduces hallucinations by grounding responses in actual sources

- Allows models to work with private or domain-specific information

- Enables citation of sources for transparency
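To make the two steps from the definition concrete, here is a minimal, library-independent sketch in Delphi. It does not use TTMSMCPCloudAI and does not call any LLM. It only shows the idea: first retrieve the most relevant text from a small knowledge base, then build a prompt that carries that text as context. The `Docs` array, the naive word-overlap scoring and the names `Score`, `Retrieve` and `BuildPrompt` are all assumptions made up for this illustration. Real RAG systems typically use embeddings or a search index for the retrieval step.

```pascal
program RagSketch;

{$APPTYPE CONSOLE}

uses
  System.SysUtils;

const
  // Hypothetical in-memory "knowledge base" (assumption for this sketch);
  // a real application would search files, a database or a vector store.
  Docs: array[0..2] of string = (
    'The Golden Bird is a fairy tale collected by the Brothers Grimm.',
    'Delphi is an Object Pascal based development environment.',
    'Base64 is an encoding that represents binary data as text.');

// Retrieval helper: counts how many of the longer words of the question
// occur in the document (case-insensitive, naive substring match).
function Score(const ADoc, AQuestion: string): Integer;
var
  W: string;
begin
  Result := 0;
  for W in AQuestion.ToLower.Split([' ', '?', ',', '.'], TStringSplitOptions.ExcludeEmpty) do
    if (W.Length > 3) and ADoc.ToLower.Contains(W) then
      Inc(Result);
end;

// Retrieval step: returns the best scoring document,
// or an empty string when no document matches at all.
function Retrieve(const AQuestion: string): string;
var
  I, S, Best: Integer;
begin
  Result := '';
  Best := 0;
  for I := Low(Docs) to High(Docs) do
  begin
    S := Score(Docs[I], AQuestion);
    if S > Best then
    begin
      Best := S;
      Result := Docs[I];
    end;
  end;
end;

// Augmentation step: prepends the retrieved text to the question.
// The resulting string is what would be sent to the LLM for generation.
function BuildPrompt(const AQuestion: string): string;
var
  Context: string;
begin
  Context := Retrieve(AQuestion);
  if Context = '' then
    Result := AQuestion
  else
    Result := 'Use only the following context to answer.' + sLineBreak +
      'Context: ' + Context + sLineBreak +
      'Question: ' + AQuestion;
end;

begin
  Writeln(BuildPrompt('Who collected the Golden Bird fairy tale?'));
end.
```

The generation step is deliberately left out: the augmented prompt is simply printed, where a real application would pass it to the LLM.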

RAG with TTMSMCPCloudAI

So how can we apply RAG when using the TTMSMCPCloudAI component to automate the use of LLMs? There are two ways:

- We add data to the context when sending the prompt text

- We take advantage of files we can store in the account we use for the cloud LLM service

If we want to reuse existing data, method 2 is clearly preferable. It avoids having to send the data along with the prompt over and over again: we upload the files once and then refer to them, along with the prompt, by the ID assigned by the cloud LLM service. Of the cloud LLMs supported by TTMSMCPCloudAI, OpenAI, Gemini, Claude and Mistral currently offer file storage. Although Mistral documents this feature, we have so far not found a way to use their API successfully; even their own curl examples, run with curl, fail. So, for now, we focused on OpenAI, Gemini and Claude. More on how to use this later.

RAG with data sent along with the prompt

Fortunately, this mechanism is broadly supported across the supported cloud LLMs, and it is simple to use. The TTMSMCPCloudAI component has the methods:

- AddFile()

- AddText()

- AddURL()

AddFile() allows us to send data such as text files, PDF files, Excel files, image files and audio files along with the prompt (the supported file types currently differ from LLM to LLM, so check the service to verify the supported types). For binary files, this data is typically base64 encoded and sent along with the prompt.

AddText() sends additional data with the prompt as plain text.

AddURL() sends a hyperlink to data, which can be a link to an online text document, image, PDF, etc. Here again, check with the LLM service what is supported.
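As an illustration of the base64 encoding mentioned for binary files, the following self-contained Delphi sketch turns the content of any file into a base64 string using the standard System.NetEncoding unit. This is not the component's internal code, which is not shown in this article. The function name `FileToBase64` is an assumption chosen for this example.

```pascal
program Base64FileSketch;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  System.IOUtils,
  System.NetEncoding;

// Reads a file from disk and returns its content as one base64 string.
// FileToBase64 is a hypothetical helper, not part of TTMSMCPCloudAI.
function FileToBase64(const AFileName: string): string;
var
  Bytes: TBytes;
  Encoder: TBase64Encoding;
begin
  Bytes := TFile.ReadAllBytes(AFileName);
  // CharsPerLine = 0 suppresses the line breaks that the default
  // TNetEncoding.Base64 instance inserts into long output.
  Encoder := TBase64Encoding.Create(0);
  try
    Result := Encoder.EncodeBytesToString(Bytes);
  finally
    Encoder.Free;
  end;
end;

begin
  try
    if ParamCount < 1 then
      Writeln('Usage: Base64FileSketch <file>')
    else
      Writeln(FileToBase64(ParamStr(1)));
  except
    on E: Exception do
      Writeln(E.ClassName, ': ', E.Message);
  end;
end.
```

Base64 output is roughly one third larger than the original binary data, which is one reason why sending the same file with every prompt becomes costly.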

RAG with uploaded files

Another approach is to send the files separately to the cloud LLM (for those cloud LLMs that have this capability) and refer to this data, as many times as needed, via an ID. At this moment, we have implemented this for OpenAI, Gemini and Claude.

To access files, the TTMSMCPCloudAI component has the .Files collection. Call TTMSMCPCloudAI.GetFiles to retrieve the information about all previously uploaded files into the .Files collection. Call TTMSMCPCloudAI.UploadFile() to add files to the cloud LLM. To delete a file, use TTMSMCPCloudAI.Files[index].Delete.

When the Files collection is filled with the files retrieved from the cloud LLM, the IDs of the available files are automatically sent along with the prompt, so the LLM can use them for retrieval augmented generation.

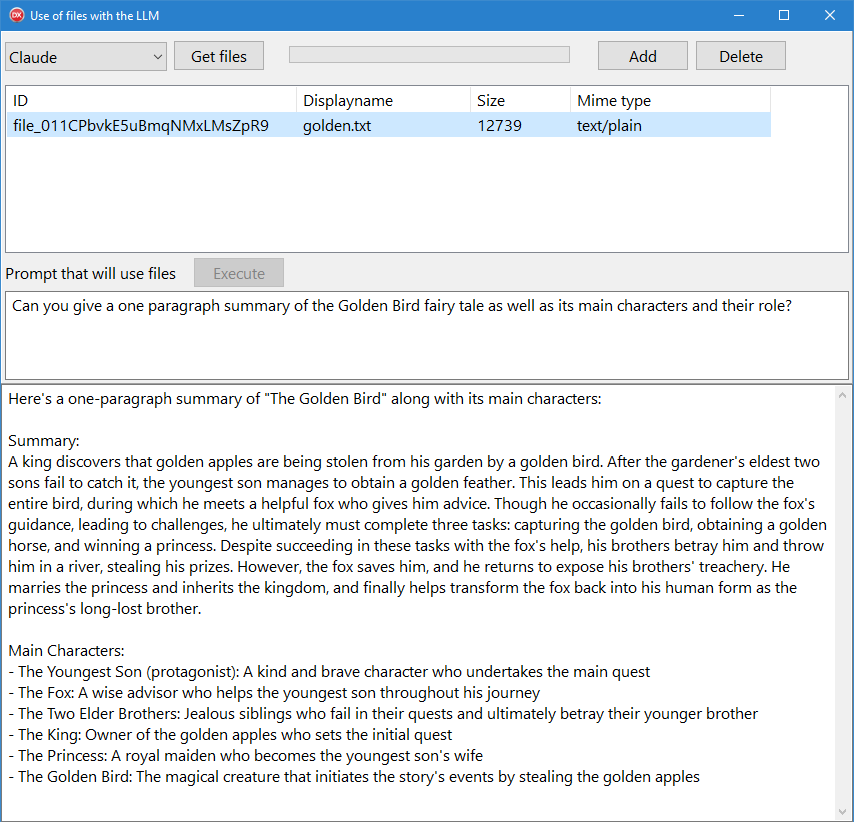

The following example should make the concept clearer right away:

We uploaded to the cloud LLM a text file containing the story of The Golden Bird by the Brothers Grimm. With this story added, we can query the LLM for the information we want from this fairy tale.

What you see in the screenshot of the demo is the list of files that were uploaded to the cloud LLM, here Claude. Below it, you can see the prompt that asks for a summary and the main characters of the fairy tale.

The code used for this is as simple as:

// send the prompt

TMSMCPCloudAI1.Context.Text := memo1.Lines.Text;

// get the response

procedure TForm1.TMSMCPCloudAI1Executed(Sender: TObject;

AResponse: TTMSMCPCloudAIResponse; AHttpStatusCode: Integer;

AHttpResult: string);

begin

ProgressBar1.State := pbsPaused;

if AHttpStatusCode = 200 then

begin

memo2.Text := AResponse.Content.Text;

end

else

ShowMessage('HTTP error code: '+AHttpStatusCode.ToString+#13#13+ AHttpResult);

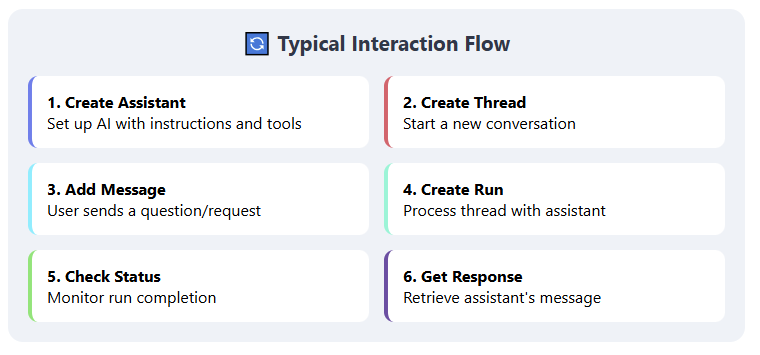

end;OpenAI assistants

    if TMSMCPCloudAI1.Service = aiOpenAI then
    begin
      // if an assistant exists, use it, if not create a new assistant
      TMSMCPCloudAI1.GetAssistants(procedure(AResponse: TTMSMCPCloudAIResponse; AHttpStatusCode: Integer; AHttpResult: string)
        var
          id: string;
        begin
          if AHttpStatusCode div 100 = 2 then
          begin
            if TMSMCPCloudAI1.Assistants.Count > 0 then
            begin
              id := TMSMCPCloudAI1.Assistants[0].ID;
              RunThread(id);
            end
            else
              TMSMCPCloudAI1.CreateAssistant('My assistant', 'you assist me with files', [aitFileSearch], procedure(const AID: string)
                begin
                  id := AID;
                  RunThread(id);
                end);
          end;
        end);
    end;

    procedure TForm1.RunThread(id: string);
    begin
      // create a new thread
      TMSMCPCloudAI1.CreateThread(procedure(const AId: string)
        var
          sl: TStringList;
          i: integer;
          threadid: string;
        begin
          threadid := aid;
          // create a list of file IDs found to add these to the message for the thread to run with the assistant
          sl := TStringList.Create;
          for i := 0 to TMSMCPCloudAI1.Files.Count - 1 do
            sl.Add(TMSMCPCloudAI1.Files[i].ID);

          TMSMCPCloudAI1.CreateMessage(ThreadId, 'user', Memo1.Lines.Text, sl, aitFileSearch, procedure(const AId: string)
            begin
              // message created, now run the thread and wait for it to complete
              TMSMCPCloudAI1.RunThreadAndWait(ThreadId, id, procedure(AResponse: TTMSMCPCloudAIResponse; AHttpStatusCode: Integer; AHttpResult: string)
                begin
                  // when the thread run completed, the LLM returned a response
                  memo2.Lines.Add(AHttpResult);
                  btnExec.Enabled := true;
                  progressbar1.State := pbsPaused;
                end);
            end,
            procedure(AResponse: TTMSMCPCloudAIResponse; AHttpStatusCode: Integer; AHttpResult: string)
            begin
              memo2.Lines.Add('Error ' + AHttpStatusCode.ToString);
              memo2.Lines.Add(AHttpResult);
              btnExec.Enabled := true;
              progressbar1.State := pbsPaused;
            end
          );

          sl.Free;
        end);
    end;

Conclusion

This new functionality for using files with the cloud LLM is available in TMS AI Studio. If you have an active TMS ALL-ACCESS license, you have access to TMS AI Studio, which includes the TTMSMCPCloudAI component and also has everything on board to let you build MCP servers and clients.

Bruno Fierens

This blog post has received 2 comments.

2. Wednesday, July 23, 2025 at 2:35:30 PM

Sorry, at this moment, Azure AI search is not supported.

Bruno Fierens
