Add AI superpower to your Delphi & C++Builder apps part 1

Wednesday, May 21, 2025

Image generated by OpenAI

Many software developers view AI primarily as a tool to boost productivity and improve their software development skills. But there’s another powerful angle: using AI to enhance and extend the functionality of the applications we build. That’s exactly what we’ve been exploring over the past year—specifically from the perspective of Delphi and C++Builder developers. In this blog series, we’ll walk you through, step by step, the tools and techniques we’ve already developed for you to leverage. At the same time, we hope to spark a lively discussion and inspire new ideas about how AI can help us build software with functionality that feels out of this world. In this first article, we’ll start with a small but essential step: how to use LLMs from within your applications. In the next part, we’ll dive into LLM function calling and how it can enable deep integrations with your apps.

Integrating LLMs via REST API with TMS AI Studio's TTMSMCPCloudAI Component

The surge in Large Language Models (LLMs) such as ChatGPT, Claude, Mistral, and others has revolutionized how applications interact with language. Whether you want to translate content, summarize data, or build intelligent assistants, these models are all accessible through REST APIs.

In this article, we’ll explore how you can work with a wide range of LLM services, such as OpenAI, DeepSeek, Claude, Gemini, Perplexity, Grok, Ollama, and Mistral, through a single abstraction: the TTMSMCPCloudAI component from TMS AI Studio.

A Unified Approach to LLM Integration

Each cloud LLM provider offers slightly different API semantics, authentication methods, and payload structures. However, at a higher level, their interfaces boil down to a simple pattern:

- Send text input ("prompt")

- Receive generated text response ("completion")

This pattern is commonly referred to as a completion API (or chat completion API).

With TTMSMCPCloudAI, you don’t need to worry about low-level details. The component abstracts away REST communication, authentication, and request building, so you can focus purely on integrating language capabilities into your app.
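To make those provider differences concrete, here is a minimal sketch in standard C++ of the kind of detail such an abstraction has to hide. It is not TMS AI Studio code: `Provider`, `Endpoint`, and `endpointFor` are hypothetical names chosen for illustration. The URLs and header names are the publicly documented ones for OpenAI's chat completions API, Anthropic's messages API, and a default local Ollama install at the time of writing, so check the provider documentation before relying on them.

```cpp
#include <map>
#include <stdexcept>
#include <string>

// Hypothetical types for illustration only; not part of TMS AI Studio.
enum class Provider { OpenAI, Anthropic, Ollama };

struct Endpoint {
    std::string url;                             // where the HTTPS POST goes
    std::map<std::string, std::string> headers;  // authentication and content headers
};

// Returns the endpoint URL and request headers for a provider.
// The same API key ends up in a different header depending on the provider.
Endpoint endpointFor(Provider p, const std::string& apiKey) {
    Endpoint e;
    e.headers["Content-Type"] = "application/json";
    switch (p) {
        case Provider::OpenAI:
            e.url = "https://api.openai.com/v1/chat/completions";
            e.headers["Authorization"] = "Bearer " + apiKey;
            return e;
        case Provider::Anthropic:
            e.url = "https://api.anthropic.com/v1/messages";
            e.headers["x-api-key"] = apiKey;
            e.headers["anthropic-version"] = "2023-06-01";
            return e;
        case Provider::Ollama:
            // Assumes a default local install; no API key is sent.
            e.url = "http://localhost:11434/api/chat";
            return e;
    }
    throw std::invalid_argument("unknown provider");
}
```

Calling `endpointFor(Provider::Anthropic, key)` yields an `x-api-key` header, while the OpenAI case yields an `Authorization: Bearer` header. A unified component takes this kind of per-provider branching off your hands.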

The AI services supported at this moment are:

- OpenAI (ChatGPT): Leading AI research and deployment company, creator of GPT-4 and ChatGPT.

- Gemini (Google AI): Google's flagship multimodal AI family developed by Google DeepMind.

- Claude (Anthropic): Anthropic’s family of helpful, harmless, and honest AI assistants.

- Grok (xAI): AI developed by Elon Musk’s xAI, integrated with X (formerly Twitter).

- DeepSeek: Open-source and commercial LLMs focused on code and reasoning tasks.

- Mistral: European AI company offering open-weight LLMs like Mistral and Mixtral.

- Ollama: A local runtime for running LLMs on your own machine with a simple CLI.

- Perplexity AI: An AI-powered answer engine combining web search and conversational AI.

Common Use Cases

You can use these models for a variety of natural language processing tasks:

- Translation between languages

- Summarization of documents or datasets

- Information retrieval from user input

- Conversational agents and chatbots

- And much more you'll uncover in the next article on function calling!

All these use cases follow the same underlying pattern: send context → receive a response. This makes them perfect candidates for abstraction.
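As a rough illustration of why this pattern abstracts so well, the sketch below expresses translation and summarization as thin wrappers around a single "system instructions plus prompt in, text out" callable. It is plain standard C++, not the TTMSMCPCloudAI API: the `Completer` alias, the `translate` and `summarize` helpers, and the prompt wording are all assumptions made for this example.

```cpp
#include <functional>
#include <string>

// Hypothetical signature: system instructions and a prompt go in,
// the generated completion text comes out. Any provider could sit behind it.
using Completer = std::function<std::string(const std::string& systemRole,
                                            const std::string& prompt)>;

// Translation is just a specific system role plus a specific prompt.
std::string translate(const Completer& llm, const std::string& text,
                      const std::string& targetLanguage) {
    return llm("You are a translator. Reply with the translation only.",
               "Translate to " + targetLanguage + ":\n" + text);
}

// Summarization reuses the same callable with different instructions.
std::string summarize(const Completer& llm, const std::string& text,
                      int maxSentences) {
    return llm("You summarize documents accurately and concisely.",
               "Summarize in at most " + std::to_string(maxSentences) +
                   " sentences:\n" + text);
}
```

Because both helpers depend only on the `Completer` callable, swapping the provider behind it, or substituting a fake for unit tests, requires no change to the use-case code.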

Step-by-Step Integration Workflow

The typical workflow using the TTMSMCPCloudAI component looks like this:

- Request an API key from the LLM provider (e.g., OpenAI, Anthropic).

- Select your model (e.g., gpt-4, claude-3-7-sonnet, mistral-medium).

- Set the prompt context, and optionally the system role (instructions for the model’s behavior).

- Send the request via HTTPS POST.

- Receive and process the generated response text.

All of this is encapsulated cleanly by the component, making it straightforward to switch between providers or models.
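For readers curious about what the model, system role, and prompt steps amount to on the wire, the following standard C++ sketch assembles a request body in the OpenAI-style chat format, where each message carries a role and the order of messages is preserved. The `Message` and `Conversation` types and the `jsonEscape` helper are hypothetical and say nothing about how the component builds its payload internally; other providers use somewhat different body layouts.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical types for illustration only; not part of TMS AI Studio.
struct Message {
    std::string role;     // "system", "user" or "assistant"
    std::string content;
};

// Escapes quotes, backslashes and common whitespace control characters.
// Other control characters are not handled in this sketch.
std::string jsonEscape(const std::string& s) {
    std::string out;
    for (char c : s) {
        switch (c) {
            case '"':  out += "\\\""; break;
            case '\\': out += "\\\\"; break;
            case '\n': out += "\\n";  break;
            case '\r': out += "\\r";  break;
            case '\t': out += "\\t";  break;
            default:   out += c;
        }
    }
    return out;
}

class Conversation {
public:
    // The optional system role becomes the first message.
    explicit Conversation(std::string systemRole) {
        if (!systemRole.empty())
            messages_.push_back({"system", std::move(systemRole)});
    }
    void addUser(std::string text) { messages_.push_back({"user", std::move(text)}); }
    void addAssistant(std::string text) { messages_.push_back({"assistant", std::move(text)}); }

    // Serializes the model name and the ordered messages to a JSON body.
    std::string toJson(const std::string& model) const {
        std::string out = "{\"model\":\"" + jsonEscape(model) + "\",\"messages\":[";
        for (std::size_t i = 0; i < messages_.size(); ++i) {
            if (i > 0) out += ',';
            out += "{\"role\":\"" + messages_[i].role + "\",\"content\":\"" +
                   jsonEscape(messages_[i].content) + "\"}";
        }
        return out + "]}";
    }

private:
    std::vector<Message> messages_;
};
```

The resulting string is what would be sent as the body of the HTTPS POST. Parsing the JSON response is left out here, since it would need a JSON library.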

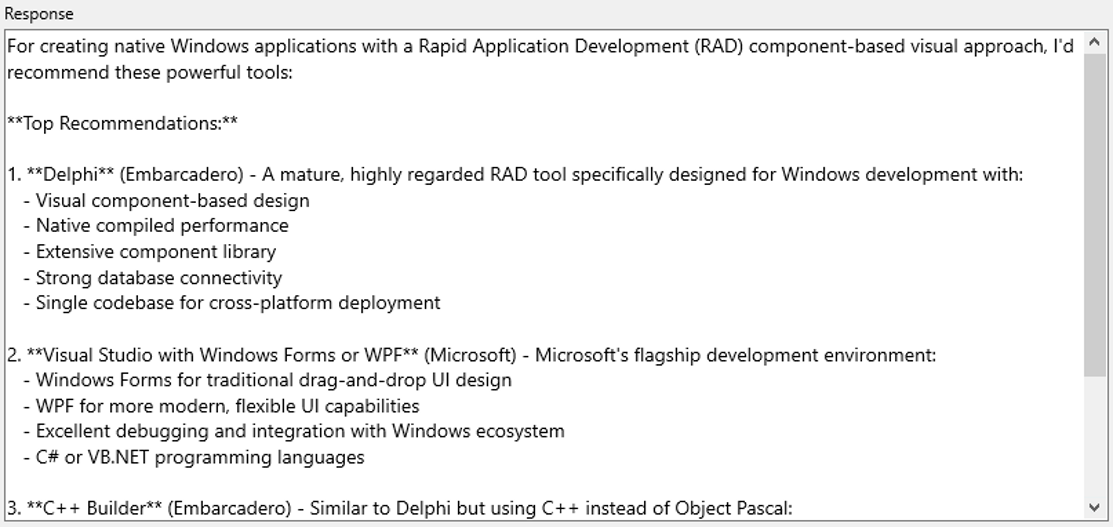

Code Example: Ask Claude About Development Tools

Here's a practical example of how to call Anthropic's Claude model using TTMSMCPCloudAI in a Delphi or C++Builder application:

Once Execute is called, the request is sent, and the response text will be available via the result event or property for further use in your application.

And of course, it is reassuring to see that the LLM responds with Delphi as its first recommendation 😉

Why Use TTMSMCPCloudAI?

- Rapid Development: Eliminate the boilerplate of REST clients and JSON handling.

- Flexibility: Switch between LLM providers without rewriting logic.

- RAD Integration: Integrates seamlessly into Delphi and C++Builder apps built for Windows, macOS, Linux, iOS, and Android.

- Cross-platform: Build once, deploy anywhere.

Get started

As LLMs become increasingly critical in building intelligent applications, having a robust and unified abstraction like TTMSMCPCloudAI allows you to stay focused on the logic and user experience—not the plumbing.

In upcoming articles, we’ll dive deeper into function calling, RAG, agents, MCP servers & clients.

If you have an active TMS ALL-ACCESS license, you have access to TMS AI Studio, which includes the TTMSMCPCloudAI component as well as everything you need to build MCP servers and clients.

Bruno Fierens

This blog post has received 12 comments.

2. Tuesday, June 10, 2025 at 10:59:01 AM

The Context can be emptied to start a new conversation or kept to continue a conversation.

Bruno Fierens

3. Tuesday, June 10, 2025 at 5:21:31 PM

Does this mean that the user messages and the responses from the LLM are NOT interleaved? In other words, is the history of user messages just placed one after the other in the Context (i.e. TStrings) and sent to the LLM as is?

Joe

4. Tuesday, June 10, 2025 at 9:39:40 PM

Yes

Bruno Fierens

5. Tuesday, June 10, 2025 at 9:58:50 PM

Bruno, this is a problem. I've tested something I was doing directly with the OpenAI API (via Delphi open source libraries), where I send the history of the system, user and assistant messages. I send them back in the same ORDER. Then I used your TTMSFNCCloudAI component. It makes a difference. The LLM needs to know the order of the messages and also each message role. Without the order and role, the application I'm developing doesn't work.

I very much would like to purchase your component (multiple services, better support etc).

It's my opinion that grouping all user messages together will LIMIT the effectiveness of the LLM.

Joe

6. Tuesday, June 10, 2025 at 10:06:24 PM

We will investigate and will most likely be able to extend it to make it different messages and keep the message category for every message.

Bruno Fierens

7. Saturday, July 12, 2025 at 10:31:06 PM

Hi Bruno,

Just curious if you and your team will make TTMSFNCCloudAI handle the order and type of message (User, Assistant, System etc)?

Wondering what the progress is on that?

Joe

8. Sunday, July 13, 2025 at 11:04:56 AM

There is TTMSFNCCloudAI.AssistantRole and TTMSFNCCloudAI.SystemRole to add Assistant and System messages. The user messages are added via TTMSFNCCloudAI.Context.

Bruno Fierens

9. Monday, August 11, 2025 at 12:34:43 PM

TMS AI makes online education international by letting teachers and students speak and understand each other in their own languages using text-to-speech and speech-to-text. It also allows company websites to be available in many languages and lets users control software with voice commands. It’s truly amazing.

Mehrdad Esmaili

10. Tuesday, August 12, 2025 at 3:50:25 PM

Is there an update to the problem raised by Joe? It seems like a showstopper. Having a context which is just a TStrings isn't going to work and goes against how the API is supposed to be used. See for example https://platform.openai.com/docs/api-reference/chat/create: the messages by the user and by the system need to be kept separate. This also enables going back into the history in case the end user wants to edit their earlier question. Also noticed that all examples (even the ClientDemo) completely reset the context each time.

Wijnnobel Wilfred

11. Tuesday, August 12, 2025 at 4:27:34 PM

You can separately add assistant & system context via TTMSFNCCloudAI.SystemRole and TTMSFNCCloudAI.AssistantRole properties, separate from user messages that are set via TTMSFNCCloudAI.Context.

Bruno Fierens

12. Friday, August 15, 2025 at 9:04:53 AM

Thanks, but I feel like you misunderstood the question. It's not about those role properties. See below for an example on how the context should be sent in case of OpenAI. This is not possible right now with the component, is it? This is the showstopper.

    "messages": [
      {
        "role": "system",
        "content": "You are helpful assistant."
      },
      {
        "role": "user",
        "content": "Good morning!"
      },
      {
        "role": "assistant",
        "content": "Good morning! How can I assist you today?"
      },
      {
        "role": "user",
        "content": "I just needed your reply to get a messages example for the OpenAI completions API"
      },
      {
        "role": "assistant",
        "content": "I see! No problem."
      },
      {
        "role": "user",
        "content": "Thanks"
      }
    ]

Wijnnobel Wilfred

Does the component automatically add "user" prompts to the history?

Joe