
Seamlessly take advantage of different AI LLMs from your Delphi apps


Thursday, January 9, 2025

We are thrilled to announce the release of the new TTMSFNCCloudAI component, available in the TMS FNC Cloud Pack. It lets Delphi developers integrate and interact with Large Language Models (LLMs) in their applications, and because it is built on the FNC framework, the same component can be used in VCL Windows, FMX cross-platform, and TMS WEB Core web apps.

Supported LLMs

The TTMSFNCCloudAI component supports a variety of state-of-the-art LLMs, including:

- OpenAI: Integrate with models like GPT-4 and GPT-3.5.

- Claude by Anthropic: Use Claude's conversational AI capabilities.

- Gemini by Google: Tap into the capabilities of Gemini’s cutting-edge models.

- Grok by x.ai: A robust choice for complex AI-driven interactions.

- Perplexity: Designed for advanced question answering and information retrieval.

- Ollama: Local LLM integration for privacy-focused or offline applications.

What is TTMSFNCCloudAI?

The TTMSFNCCloudAI component enables you to:

- Send prompts to your chosen LLM.

- Retrieve and process responses seamlessly.

- Customize model settings such as temperature, model type, and other parameters.

- Handle advanced conversation features, such as setting user and system roles.

Its intuitive design simplifies the process of embedding LLM capabilities into Delphi-based applications, whether you are building chatbots, summarization tools, code generators, or anything in between.

How Does It Work?

The component provides a unified API to interact with different LLMs. Developers can configure API keys, select the desired model, set parameters, and handle responses through well-defined events. The same code works with every supported LLM: once the API key and model for a service are configured, switching to another LLM only requires changing the Service property.

Here’s a basic example of how to use TTMSFNCCloudAI:

    procedure TForm1.BtnExecuteClick(Sender: TObject);
    begin
      TMSFNCCloudAI1.Service := aiOpenAI; // Select OpenAI service
      TMSFNCCloudAI1.APIKeys.OpenAI := 'your_openai_api_key';
      TMSFNCCloudAI1.Settings.OpenAIModel := 'gpt-4';
      TMSFNCCloudAI1.Context.Text := 'What are the key benefits of using TMS FNC Cloud AI?';
      TMSFNCCloudAI1.OnExecuted := DoAIResponse;
      TMSFNCCloudAI1.Execute;
    end;

    procedure TForm1.DoAIResponse(Sender: TObject; AResponse: TTMSFNCCloudAIResponse;
      AHttpStatusCode: Integer; AHttpResult: string);
    begin
      if AHttpStatusCode = 200 then
        ShowMessage('AI Response: ' + AResponse.Content.Text)
      else
        ShowMessage('Error: ' + AHttpResult);
    end;
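The example above configures the component on every button click. As a possible variation, the sketch below moves the one-time setup into the form's OnCreate handler and wraps the per-prompt call in a small helper. It reuses only the properties and the DoAIResponse handler shown above. The AskAI method, the OPENAI_API_KEY environment variable name, and the wiring of FormCreate to the form's OnCreate event are assumptions made for illustration and are not part of the component.

```delphi
// Sketch only: assumes the same TForm1 and TMSFNCCloudAI1 component as in the
// example above, with FormCreate, AskAI and DoAIResponse declared in TForm1.
// AskAI and the OPENAI_API_KEY variable name are hypothetical.
// System.SysUtils must be in the uses clause for GetEnvironmentVariable.

procedure TForm1.FormCreate(Sender: TObject);
begin
  // One-time configuration: service, key, model and response handler.
  TMSFNCCloudAI1.Service := aiOpenAI;
  // Read the key from the environment instead of hard-coding it in source
  // (desktop targets only; a web app needs another way to supply the key).
  TMSFNCCloudAI1.APIKeys.OpenAI :=
    System.SysUtils.GetEnvironmentVariable('OPENAI_API_KEY');
  TMSFNCCloudAI1.Settings.OpenAIModel := 'gpt-4';
  TMSFNCCloudAI1.OnExecuted := DoAIResponse;
end;

procedure TForm1.AskAI(const APrompt: string);
begin
  // Per-request work: set the prompt and start the request.
  // The answer arrives later in DoAIResponse.
  TMSFNCCloudAI1.Context.Text := APrompt;
  TMSFNCCloudAI1.Execute;
end;

procedure TForm1.BtnExecuteClick(Sender: TObject);
begin
  AskAI('What are the key benefits of using TMS FNC Cloud AI?');
end;
```

Keeping the configuration in one place means that selecting a different service later touches only FormCreate, while every caller of AskAI stays unchanged.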

Some examples

Here are some examples of how we used the different LLMs for different tasks, ranging from writing Object Pascal code and producing HTML/CSS pages to translating a poem from English to German.

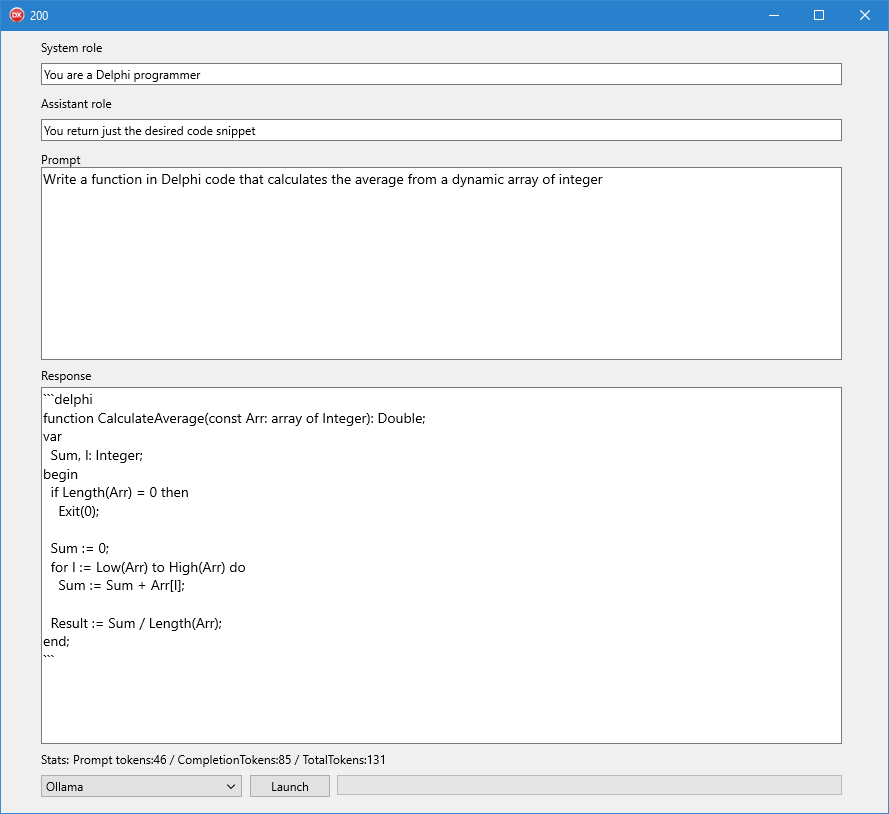

Delphi code generation

The classic example. Use the system and assistant roles to specify that you want Object Pascal code to be returned, and use the prompt to describe what the code should do:

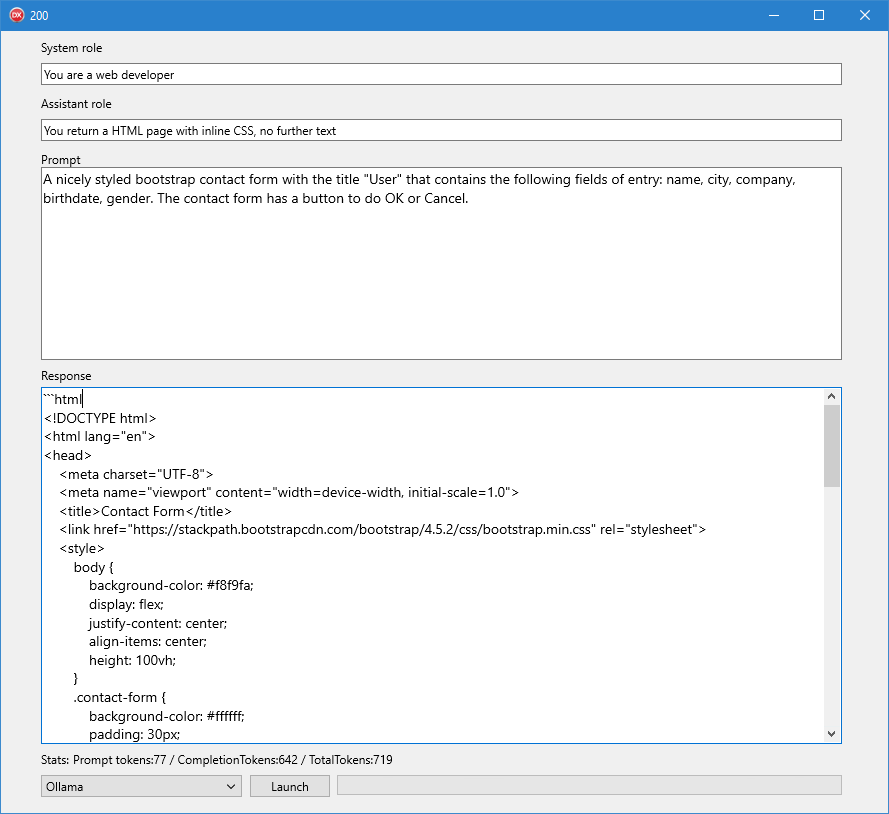

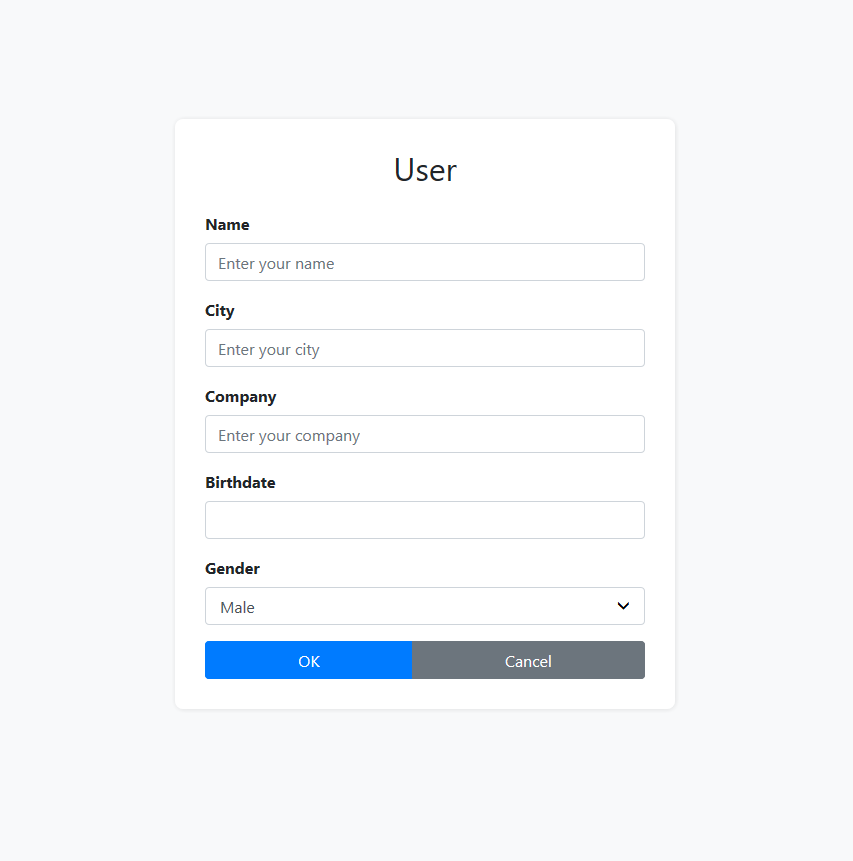

Web pages (HTML/CSS) generation

Another classic.

Result:

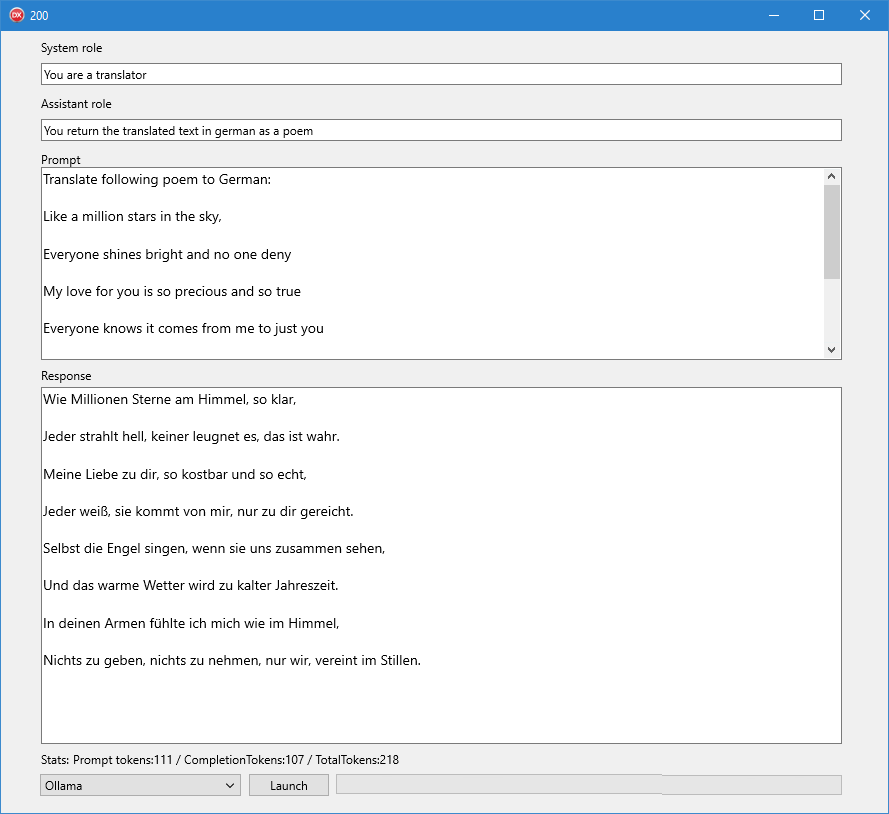

Translation

In this example, we ask the LLM to translate a poem from English to German and, of course, we expect the translated text to rhyme as well.

Future Extensions

The TTMSFNCCloudAI component is just the beginning. In upcoming updates, we plan to introduce:

- Real-time Streaming Responses: Ideal for chat applications and progress monitoring.

- Integration with More AI Services: Expanding support for new and emerging AI platforms.

- Fine-tuning Models: Enable fine-tuning for domain-specific tasks.

- Enhanced Analytics: Token usage tracking, cost estimation, and response optimization tools.

- Your ideas: Let us know your wildest ideas for leveraging the power of AI in your Delphi apps.

Get Started Today!

Don’t miss the opportunity to elevate your Delphi applications with the power of AI. With TTMSFNCCloudAI, integrating LLM capabilities has never been easier.

Learn More and Download the Latest TMS FNC Cloud Pack

Empower your applications with the intelligence of the future – all from the comfort of your Delphi IDE!

Bruno Fierens

This blog post has received 1 comment.


But I would welcome an example where, with Ollama, I can gradually develop my original topic from the first question through the second and so on, all in one uninterrupted thread, moving closer to the ideal with each step. In this case, restoring the context every time is inappropriate.

It seems that each call to TTMSFNCCloudAI automatically starts a new thread, with no continuation of the previous one.

But an LLM can hold the context and make more extensive work easier for us, unless we explicitly tell it to end the thread. It seems that repeatedly restarting the questions from scratch is a behavior of the TTMSFNCCloudAI component.

For example, in the PowerShell console I stay on the same topic until I enter /bye, or until I explicitly request a change of topic within the dialogue. Even then, the model still recalls certain information from the previous topic, because it keeps continuity with the previous context.

Do you have any modified examples?

I can't achieve this myself.

PowerShell and Ollama work perfectly for me. I would like to adopt it in Delphi as well.

Miro

Bal? Miroslav